Over the past several years there has been a push toward using scientific evidence as the foundation for nutrition and health decisions. While this is something the industry desperately needs, there are pitfalls that come with people merely claiming to use science. Appearing to use science without actually following good scientific practice can do more harm than good in some scenarios.

A few weeks ago I had the privilege of talking with Danny Lennon from Sigma Nutrition on this very topic. That hour with Danny was one of the most fruitful conversations I have had in years, and I wanted to take the concepts we discussed and turn them into an article. See the end of the article for a link to the conversation.

How Science Should Be Used

Oftentimes individuals have a favorite diet or training scheme and cite individual studies to substantiate their own hypothesis, falling into the trap of confirmation bias. It is really easy to have your own pet theory on something and then go find a single research study that kind of supports it.

One of the hard parts about science is that it takes a lot of admitting you’re wrong, and a lot of searching for and asking deep questions. A truly science-based approach should be a concerted effort to question your own current understanding and to grow. So instead of having an idea and just seeking out the evidence to support that one idea, it’s really about generating your own hypothesis and then trying to prove it wrong.

I think this is the heart of where the disconnect lies. We have this assumption that science is gathering the evidence to prove yourself right. However, when you boil it down to its core, science is really trying to gather your evidence to prove your hypothesis wrong.

As individuals we should be more concerned with finding the truth than with proving our answers right. I think that’s a really important mindset to bring whenever we decide we’re going to make evidence-based decisions.

“We have this assumption that science is gathering the evidence to prove yourself right; when you kind of boil it down to its core, science is really trying to gather the evidence to prove your hypothesis wrong.”

The Importance of Context

One of the really important things to know about science is that it is an iterative process, meaning that it makes progress in small steps, not in huge leaps and bounds (there are some minor exceptions to this).

This is important to remember as context is critical when using studies to make decisions. You really need to take research studies in context because a single study is only as good as its methodology; it’s only as good as its population; it’s only as good as the analytic tools that are used for the data.

Whenever you are trying to find an answer, get as broad a scope and as much context regarding that question as you can, because taking a single study in isolation can oftentimes lead you down the wrong path of inquiry. It is imperative to understand how a single study fits within that whole context.

For example, say you’re trying to determine if a certain supplement is effective, or if certain foods lead to heart disease. It’s really easy to find a single study that falls on either side of the fence, so you really have to take things in context.

One of the great things about the nutrition and exercise physiology literature is that it’s rife with these intellectual issues, the kind where the data are conflicting.

Perfect examples are training studies where researchers look at rep schemes or weight schemes. A lot of times they won’t find a significant difference in strength between certain rep schemes, and you’ll draw the conclusion that it doesn’t matter for strength whether you train in the one to three rep range or the eight to ten rep range.

Well, a lot of times, given the nature of these studies, with high variation and really small sample sizes, we don’t have adequate statistical power to draw that conclusion. The chance of a type two error, meaning that you fail to find the signal in the noise, is really high.

So you might read one study and say it doesn’t matter based on this study but when you really kind of step back and look at all of the literature and you also use some of your own experiences, you know that’s not the case. So it’s really important to understand that each individual study has these limitations and that if you just use that one single study you would come to the wrong conclusion.
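To put rough numbers on the type two error point above, here is a minimal sketch of how statistical power depends on sample size. It is my own illustration, not taken from any particular study: the function name `approx_power`, the assumed standardized effect size of 0.5, and the use of a normal approximation in place of an exact t-test calculation are all assumptions.

```python
from math import sqrt
from statistics import NormalDist


def approx_power(effect_size, n_per_group, alpha=0.05):
    """Approximate power of a two-sided comparison of two equal-sized groups.

    Uses a normal approximation, so it slightly overstates power for very
    small samples. effect_size is the true difference in means divided by
    the standard deviation (Cohen's d).
    """
    z = NormalDist()
    z_crit = z.inv_cdf(1 - alpha / 2)          # cutoff for "significant"
    shift = effect_size * sqrt(n_per_group / 2)  # expected test statistic
    # Probability the test statistic lands beyond either cutoff.
    return z.cdf(shift - z_crit) + z.cdf(-shift - z_crit)


if __name__ == "__main__":
    for n in (10, 30, 100):
        power = approx_power(0.5, n)
        print(f"n per group = {n:3d}: power ~ {power:.2f}, "
              f"type II error ~ {1 - power:.2f}")
```

Under these assumptions, a study with 10 people per group has only about a one in five chance of detecting a real, moderate effect. A "no significant difference" result from a study like that tells you very little on its own.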

Statistics

Mark Twain famously popularized the saying that there are three kinds of lies: “lies, damned lies, and statistics”.

The truth about statistics isn’t that bleak. In fact, it is one of the most powerful mathematical tools we have, but we have to remember that statistics has limitations, especially in the context of human physiology. It is important to remember that there is a difference between statistical significance, which is just achieving an arbitrary probability threshold, and physiological meaningfulness.

A perfect example of this is a recent study examining the effect of branched-chain amino acid supplements versus carbohydrate supplements on fat loss. If we take the paper at face value, there was a statistically significant reduction of fat mass in the branched-chain amino acids group but not in the carbohydrate group (See figure 4 of this paper to see the relevant data). However, when you look at the data, the carbohydrate group lost twice as much fat as the branched-chain amino acids group in this one study. So while one group achieved statistical significance, when you actually look at the data there’s a big disconnect between the arbitrary P value that was reached and the actual meaningfulness of the outcome.

So suppose I was someone who was trying to lose some fat. I could achieve statistical significance but only lose 0.5 kilograms, or I could not achieve statistical significance and lose 1.5 kilograms. Which one would you choose?

You have to take the limitations of statistics into consideration and recognize that there is often a disconnect between statistical significance and physiological meaningfulness. We need to look at the actual data and the effect sizes, and take those into context too.
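Here is a small sketch of how that disconnect can arise. The numbers are made up for illustration and are not the data from the study discussed above; the group names, the helper `summarize`, and the choice of a simple one-sample t-test on each group's change are all my assumptions. The point is only that a small but consistent change can clear the significance bar while a larger but highly variable change does not.

```python
from math import sqrt
from statistics import mean, stdev

# Two-sided 5% critical value of Student's t with 7 degrees of freedom
# (8 people per group). Hard-coded to keep the example dependency-free.
T_CRIT_DF7 = 2.365

# Hypothetical fat loss in kg for 8 people per group (positive = fat lost).
low_variance_group = [0.4, 0.5, 0.6, 0.5, 0.4, 0.6, 0.5, 0.5]
high_variance_group = [4.5, -2.0, 3.5, -1.5, 5.0, -2.5, 4.0, 1.0]


def summarize(changes):
    """Return (mean change, t statistic, significant at 5%?) for one group."""
    n = len(changes)
    avg = mean(changes)
    t_stat = avg / (stdev(changes) / sqrt(n))  # one-sample t against zero
    return avg, t_stat, abs(t_stat) > T_CRIT_DF7


if __name__ == "__main__":
    for name, data in [("low variance", low_variance_group),
                       ("high variance", high_variance_group)]:
        avg, t_stat, significant = summarize(data)
        print(f"{name:13s}: mean loss = {avg:.2f} kg, "
              f"t = {t_stat:.2f}, significant = {significant}")
```

In this toy data the group that loses 0.5 kg on average is "significant" and the group that loses 1.5 kg on average is not, purely because of how spread out the individual responses are. That is why the effect size and the raw data deserve a look alongside the P value.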

The Hierarchy of Evidence

Now we need to talk about the “hierarchy of evidence”. Meta-analyses are often seen as the gold standard, but there are drawbacks to hanging our hat 100% on something found in a meta-analysis.

A meta-analysis is only as good as the quality of the studies that are included in the meta-analysis. So if you have some severely flawed studies in the meta-analysis we can’t really rely on the results of that meta-analysis.
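As a rough illustration of why study quality matters so much, here is a sketch of the simplest kind of pooling, a fixed-effect inverse-variance weighted average. The study effects and standard errors are invented for the example, and `pooled_effect` is a hypothetical helper; real meta-analyses typically involve more than this (random-effects models, heterogeneity checks, bias assessment).

```python
from math import sqrt


def pooled_effect(studies):
    """Fixed-effect inverse-variance pooling.

    studies is a list of (effect, standard_error) pairs. Each study is
    weighted by 1 / standard_error**2, so precise studies count for more.
    Returns (pooled effect, pooled standard error).
    """
    weights = [1 / se ** 2 for _, se in studies]
    total = sum(weights)
    estimate = sum(w * eff for w, (eff, _) in zip(weights, studies)) / total
    return estimate, sqrt(1 / total)


# Hypothetical studies: (effect, standard error).
sound_studies = [(0.10, 0.20), (0.00, 0.25), (0.05, 0.20)]
# A hypothetical flawed study: biased effect, but a deceptively small error.
flawed_study = (0.80, 0.05)

if __name__ == "__main__":
    clean, clean_se = pooled_effect(sound_studies)
    mixed, mixed_se = pooled_effect(sound_studies + [flawed_study])
    print(f"sound studies only : {clean:.2f} (SE {clean_se:.2f})")
    print(f"with flawed study  : {mixed:.2f} (SE {mixed_se:.2f})")
```

Because the weighting rewards apparent precision, one biased study that looks very precise can dominate the pooled result and drag it far from what the sound studies suggest.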

Another way to look at it is that a meta-analysis is essentially “broad strokes”. It is a generalizable picture that applies mostly at the population level. We can learn a lot from individual randomized controlled trials, and even, taking it a step further, from looking at individual data points or outliers in a study, because on an individual basis people may fall well outside of the reported means.

Whenever you are looking for clarity on a topic or trying to answer a question, the randomized controlled trials are really important, as are the mechanistic studies. A lot of times, to really answer a question you need to know that the mechanism of action is actually true and has been validated more than once, that randomized controlled trials give results consistent with the mechanism, and that the trials have fairly repeatable effect sizes. Lastly, the meta-analyses you see should show a general consensus among the literature.

I think diet and heart disease, or even diet and cancer, is a perfect example of why you need all three to have a clear picture. When we look at mechanisms of heart disease or mechanisms of cancer, they’re really all over the map (likely due to the heterogeneous nature of the diseases).

We have some good general guiding principles about what those answers are, but we don’t have a single defining mechanism. When we get to randomized controlled trials on diet and heart disease, we get varying results across different populations, different dietary interventions, different adherence levels and myriad other things. The meta-analyses then have to draw their conclusions from these studies, and that’s a perfect example of where meta-analyses break down.

Take the meta-analyses on saturated fat and heart disease. I’m actually writing a paper on that right now, so it’s kind of a poignant topic, but there have been three well-conducted meta-analyses on saturated fat and heart disease and they all arrive at fairly disparate findings.

So that shows you that if we don’t have a really clear mechanism, and we have randomized controlled trials that aren’t coherent and congruent amongst themselves, then we get meta-analyses that each show a different picture.

That’s a really good way to illustrate this hierarchy of evidence: we need a solid foundation of mechanism, we need a solid foundation of randomized controlled trials, and then we need some good, solid meta-analyses. Any hitch along the way gets amplified as it moves up the hierarchy.

Anecdotes Vs. Scientific “Truths”

Another interesting aspect of the fitness and nutrition industry is that, due to the current state of the internet and technology, it’s literally never been easier for vast numbers of people to share their experiences. We now have large numbers of people who are not only conducting their own personal n=1 experiments but, because of internet forums, are also able to collate these reports and share them in large numbers.

As mentioned by Danny Lennon in my conversation with him on Sigma Nutrition Radio, it is completely fair for someone to claim that the results of such a personal experiment are unlikely to be valid enough to draw conclusions from. Yet there comes a point, when you see thousands of people reporting similar things from personal experiments they have done, at which it may be something worth looking into.

Now, this is a topic I battle with myself. I have the scientist side that has been trained into me for over a decade, but I also have a pragmatic side.

Pragmatic side first. Pragmatically, what I really care about at the end of the day is how we can get people results, whatever they are. If these anecdotes and these experiences manifest in practical results, I think that’s fantastic, and I don’t really care whether the proposed mechanism is true.

If we get these anecdotal stories and we get a kind of mass building of people that have experimented on themselves and found something that works, I am in support of that because a lot of people will benefit.

Now, whether it works in the way they think or not is another question, and a very, very important one. I think we need to be very careful and realize that these are not scientific truths. Someone losing weight on a ketogenic diet does not make it the sole tool for weight loss or the superior weight loss method.

These types of anecdotes are places to generate hypotheses from. We then need to ferret them out in the literature, do some experiments and some controlled science, and really figure out the truth (we can talk Popperian science at a later time). Individuals are prone to confirmation bias, myself included. In our industry we all have a lot of things that we have confirmation biases about. We don’t really do honest reflection; anything that works, we say works because of a certain reason. Anything that doesn’t work, we kind of just forget about. In the health and fitness world, confirmation bias is really strong.

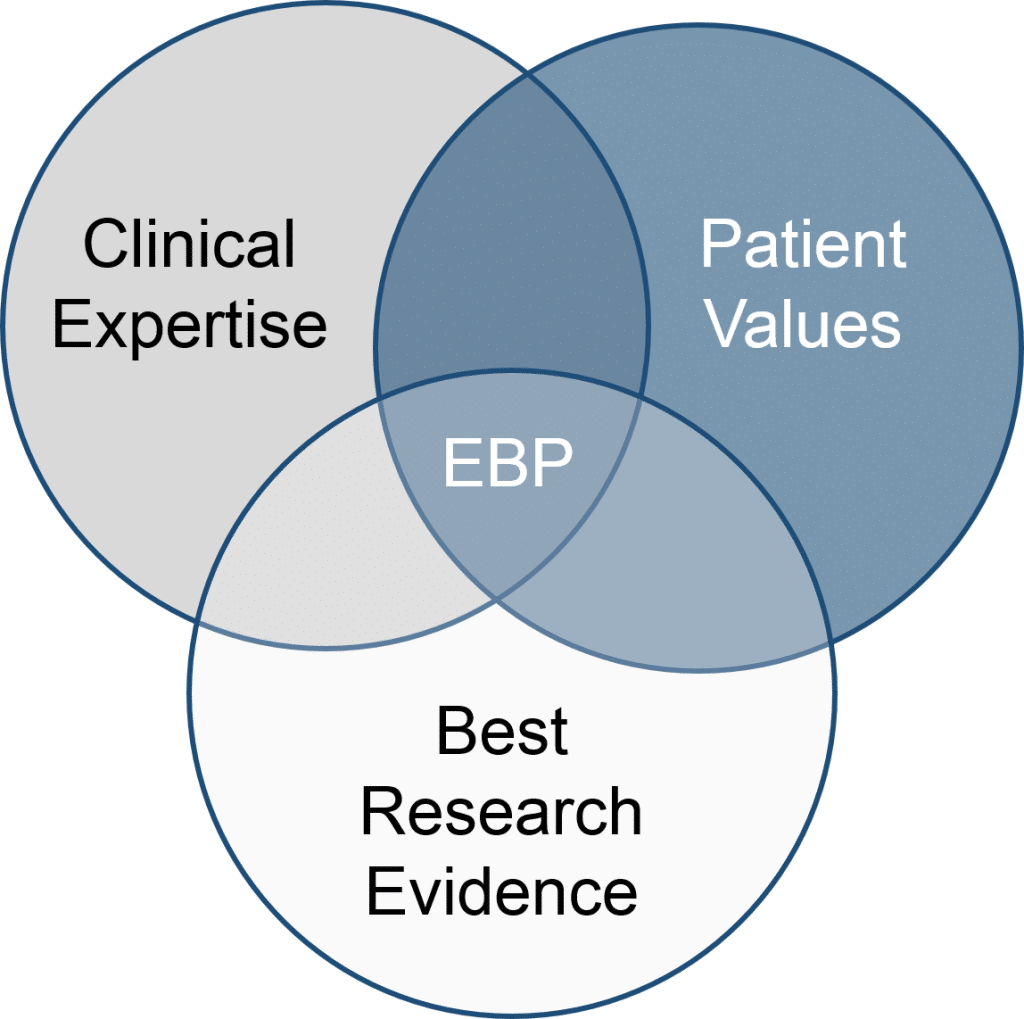

This is where the whole model of Evidence Based Practice comes into play. There is more to it than just research studies; there is also clinical expertise (i.e. anecdotes) as well as patient values.

The Wrap Up

Pursuing evidence/science based methods for health and fitness is essential to making forward progress. We need to remain honest about what science truly is, humble about the actual depth of our knowledge, and pursue the truth over being right.

Nutrition and Exercise Physiology is a relatively new field and our knowledge is currently limited. We need to acknowledge that, pragmatically, there is a place in our industry for pursuing anecdotal evidence, and that it can generate high-quality testable hypotheses and ideas.

Most importantly, keep an open mind, keep learning, keep growing, and keep searching for better answers.